Security Architecture for Google Cloud Data Lakes

Srinivasa Rao • April 21, 2020

Security design for Cloud data lakes

1 Introduction

This blog briefly explains the overall security architecture on Google Cloud Platform (GCP) and puts together the design and implementation steps for data lake security.

2 Layers of Security

● Infrastructure and cloud platform security

● Identity and access management

● Key management service

● Cloud Security Scanner

● Security keys

2.1 Infrastructure and cloud platform security

Google, like other major cloud providers, builds security in layers, from data center security all the way up to application and information management.

The lowest layer is hardware infrastructure security, which includes physical security, hardware security, and machine boot stack security. Google designs, owns, and manages its data centers, and access to them is highly restricted.

2.2 Identity and access management (IAM)

IAM allows us to define users and roles and helps control user access to GCP resources. GCP offers Cloud IAM, which lets us grant users granular access to specific GCP resources based on the principle of least privilege.

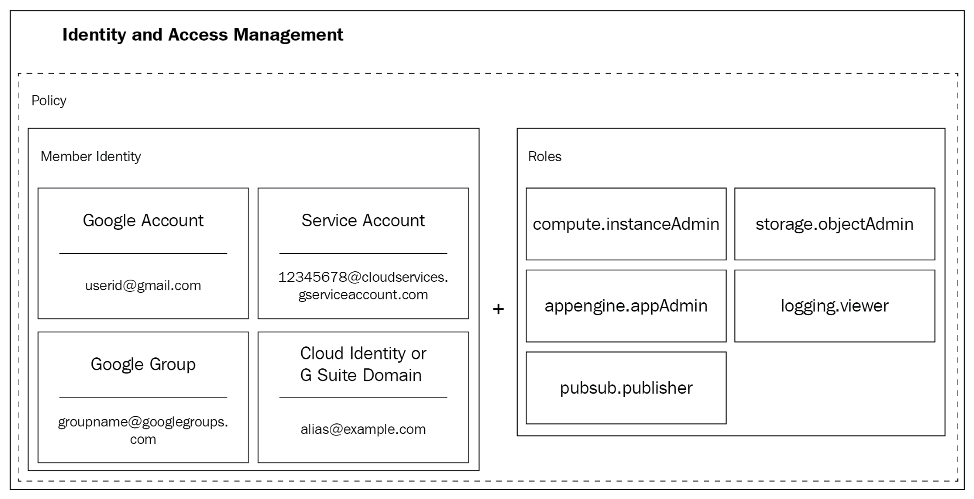

Cloud IAM is made up of members to whom access is granted. The following diagram shows the different kinds of member types and also roles, which are collections of permissions. When a member is authenticated and makes a request, Cloud IAM uses roles to assess whether that member is allowed to perform an operation on a resource:

The above diagram is courtesy to GCP.

2.2.1 Types of member accounts

2.2.1.1 Google Account

Any person who interacts with GCP through a Google account. This person can be a developer, an administrator, or an end user with access to GCP.

2.2.1.2 Service Account

A service account is an account that is associated with an application rather than with a person. Code that runs in your GCP project runs under a service account. Multiple service accounts can be created and associated with different parts of your application, which helps create granular access and permissions.

2.2.1.3 Google Group

A Google group is a collection of Google accounts and service accounts. Google Groups make it easier to apply an access policy to a collection of users, and they also make it easy to add and remove members.

2.2.1.4 G Suite Domain

Google offers organizations a G Suite account, which provides email, calendar, documents, Drive, and other enterprise services. A G Suite domain represents a virtual group of all Google accounts created in an organization's G Suite account.

2.2.1.5 Cloud Identity Domain

The primary difference between a Cloud Identity domain and a G Suite domain is that users in a Cloud Identity domain do not have access to G Suite applications and features.

2.2.2 Elements of IAM

The primary elements of IAM are as follows:

● Identities and groups

● Resources

● Permissions

● Roles

● Policies

2.2.2.1 Identities and groups

Identities and groups are entities that are used to grant access permissions to users.

2.2.2.1.1 Identities

An identity is an entity that represents a person or other agent that performs actions on a GCP resource. Identities are sometimes called members. There are several kinds of identities:

● Google account

● Service account

● Cloud Identity domain

2.2.2.1.2 Groups

Groups are useful for assigning permissions to sets of users. When a user is added to a group, that user acquires the permissions granted to the group; when the user is removed from the group, they no longer receive those permissions. Google Groups do not have login credentials, so a group cannot be used to establish an identity when making a request.

2.2.2.2 Roles

A role is a collection of permissions that determine which operations are allowed on a resource. When a role is granted to a member, the member receives all the permissions that the role contains. Permissions are represented in the form <service>.<resource>.<verb>, for example, compute.instances.delete. The resource here is a type of GCP resource, such as projects, Compute Engine instances, Cloud Storage buckets, and so on.
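To make the <service>.<resource>.<verb> format concrete, here is a minimal Python sketch that splits a permission string into its three parts and models a role as a plain set of permission strings. The INSTANCE_OPERATOR role is hypothetical, and the parser assumes exactly three dot-separated parts, as in the examples in this post; nothing here calls a GCP API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Permission:
    service: str   # e.g. "compute"
    resource: str  # e.g. "instances"
    verb: str      # e.g. "delete"


def parse_permission(name: str) -> Permission:
    """Split a permission of the form <service>.<resource>.<verb>."""
    parts = name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected <service>.<resource>.<verb>, got {name!r}")
    return Permission(*parts)


# Hypothetical custom role: a role is simply a named collection of permissions.
INSTANCE_OPERATOR = frozenset({
    "compute.instances.get",
    "compute.instances.list",
    "compute.instances.delete",
})


def role_allows(role: frozenset, requested: str) -> bool:
    """Return True if the role contains the requested permission."""
    parse_permission(requested)  # reject malformed permission names early
    return requested in role


print(parse_permission("compute.instances.delete"))
print(role_allows(INSTANCE_OPERATOR, "compute.instances.delete"))  # True
print(role_allows(INSTANCE_OPERATOR, "storage.objects.create"))    # False
```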

There are three types of roles:

● Predefined

● Primitive (Owner, Editor, and Viewer)

● Custom

2.2.2.3 Resources

Resources are entities that exist in Google Cloud Platform and can be accessed by users. Resources are a broad category that essentially includes anything we can create in GCP, such as the following:

● Projects

● Virtual machines

● App Engine applications

● Cloud Storage buckets

● Pub/Sub topics

● BigQuery datasets and tables

Google has defined a set of permissions associated with each kind of resource. Permissions vary according to the functionality of the resource.

2.2.2.4 Permissions

A permission is a grant to perform some action on a resource. Permissions vary by the type of resource with which they are associated; storage resources, for example, have permissions related to creating, listing, and deleting data. A user with the bigquery.datasets.create permission can create datasets in BigQuery, and Cloud Pub/Sub has a permission called pubsub.subscriptions.consume, which allows users to consume messages from a Cloud Pub/Sub subscription.

Here are some examples of other permissions used by Compute Engine:

● compute.instances.get

● compute.networks.use

● compute.securityPolicies.list

Here are some permissions used with Cloud Storage (the first two are Resource Manager permissions rather than storage permissions):

● resourcemanager.projects.get

● resourcemanager.projects.list

● storage.objects.create

2.2.2.5 IAM policy

An IAM policy is a collection of statements that define the type of access a user gets. A policy is attached to a resource and governs access to that resource. An IAM policy can be set at any level in the resource hierarchy: the organization, folder, project, or individual resource level. Policies are inherited from parents, so if we set a policy at the organization level, all projects inherit it, and all resources in those projects inherit it in turn.

Roles are granted to identities through these policies. A policy is a set of statements, each of which combines a role with one or more users (or members, as they are sometimes called); each such combination is called a binding. Policies are specified using JSON. The relationships between users, groups, roles, permissions, and resources can be summarized as follows:

● A user group can have one or more users as members.

● A user can belong to one or more user groups.

● A user can be assigned one or more roles.

● A role can be assigned to one or more users.

● A role grants access to one or more resources through the permissions it contains.

● A permission can be included in one or more roles.

● One resource can only have one corresponding permission for each role.
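The binding and inheritance rules above can be sketched in a few lines of Python. This is a simplified model, not the Cloud IAM evaluation engine: it only considers allow bindings (no IAM conditions or deny policies), and the resource names, group memberships, and email addresses are hypothetical. The policy dictionaries follow the same bindings/role/members shape as GCP's JSON policies, including the user: and group: member prefixes.

```python
# Hypothetical resource hierarchy: child -> parent (None for the organization).
PARENT = {
    "organizations/123": None,
    "folders/analytics": "organizations/123",
    "projects/datalake-dev": "folders/analytics",
    "buckets/raw-zone": "projects/datalake-dev",
}

# Allow policies attached at different levels, in the same shape as IAM JSON policies.
POLICIES = {
    "organizations/123": {"bindings": [
        {"role": "roles/viewer", "members": ["group:auditors@example.com"]},
    ]},
    "buckets/raw-zone": {"bindings": [
        {"role": "roles/storage.objectViewer", "members": ["group:data-analysts@example.com"]},
    ]},
}

# Hypothetical group memberships: a user can belong to several groups.
GROUPS = {
    "group:data-analysts@example.com": {"user:alice@example.com"},
    "group:auditors@example.com": {"user:alice@example.com", "user:bob@example.com"},
}


def principals_for(user: str) -> set:
    """The user plus every group the user belongs to."""
    return {user} | {group for group, members in GROUPS.items() if user in members}


def ancestors(resource):
    """Yield the resource itself, then each parent up to the organization."""
    while resource is not None:
        yield resource
        resource = PARENT[resource]


def effective_roles(user: str, resource: str) -> set:
    """Union of roles bound to the user (directly or via groups) on the resource or any ancestor."""
    principals = principals_for(user)
    roles = set()
    for node in ancestors(resource):
        for binding in POLICIES.get(node, {}).get("bindings", []):
            if principals & set(binding["members"]):
                roles.add(binding["role"])
    return roles


print(sorted(effective_roles("user:alice@example.com", "buckets/raw-zone")))
print(sorted(effective_roles("user:bob@example.com", "buckets/raw-zone")))
```

Alice gets the bucket-level role through one group and the inherited organization-level role through another; Bob only inherits the organization-level role.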

3 Teams and Responsibilities

Here are some ideas on how GCP can be controlled by various teams. Based on their responsibilities, different teams manage security for those functions and secure the GCP resources and data within their scope.

4 Security best practices

The following are brief descriptions of security best practices to keep in mind when implementing security at any level.

4.1 Least privilege

Least privilege is the practice of granting only the minimal set of permissions needed to perform a duty. IAM roles and permissions are fine-grained and enable the practice of least privilege.

4.2 Auditing

Auditing is the review of what has happened on our system. Google Cloud has a number of logging sources, such as Cloud Audit Logs, that provide details on which events occurred on your system and who executed those actions.

4.3 Policy Management

● Set organization-level Cloud IAM policies to grant access to all projects in your organization.

● Grant roles to a Google group instead of individual users when possible. It is easier to add members to and remove members from a Google group than to update a Cloud IAM policy to add or remove users.

● If we need to grant multiple roles to allow a particular task, create a Google group, grant the roles to that group, and then add users to that group.
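Granting several roles to a group, as recommended above, is a small edit to the policy's bindings. The following in-memory sketch (the function names and group email are hypothetical, and no GCP API is called) adds the group to each role's binding, reuses an existing binding for the same role, and is safe to run twice.

```python
import copy


def add_binding(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM policy with `member` added to `role`.

    An existing binding for the same role is reused, so repeating the call
    with the same arguments does not create duplicates.
    """
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding["role"] == role:
            if member not in binding["members"]:
                binding["members"].append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated


def grant_roles_to_group(policy: dict, group_email: str, roles: list) -> dict:
    """Grant every role in `roles` to a Google group rather than to individual users."""
    for role in roles:
        policy = add_binding(policy, role, f"group:{group_email}")
    return policy


project_policy = {"bindings": [{"role": "roles/bigquery.jobUser",
                                "members": ["user:alice@example.com"]}]}
updated = grant_roles_to_group(
    project_policy,
    "data-analysts@example.com",  # hypothetical group
    ["roles/bigquery.jobUser", "roles/bigquery.dataViewer"],
)
for binding in updated["bindings"]:
    print(binding["role"], binding["members"])
```

After this change, onboarding another analyst only means adding them to the group; the policy itself does not need to be edited again.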

4.4 Separation of duties

Separation of duties (SoD) is the practice of limiting the responsibilities of a single individual in order to prevent the person from successfully acting alone in a way detrimental to the organization.

4.5 Defense in Depth

Defense in depth is the practice of using more than one security control to protect resources and data. For example, to prevent unauthorized access to a database, a user attempting to read the data may need to authenticate to the database and must be executing the request from an IP address that is allowed by firewall rules.
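The database example combines two independent controls. In this minimal sketch, a hypothetical allowlist (10.20.0.0/16) stands in for the firewall rules, and a request is allowed only when both the authentication check and the network check pass.

```python
import ipaddress

# Hypothetical network allowlist standing in for firewall rules.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.20.0.0/16")]


def source_ip_allowed(source_ip: str) -> bool:
    """Control 1: the request must come from an allowed network."""
    ip = ipaddress.ip_address(source_ip)
    return any(ip in network for network in ALLOWED_NETWORKS)


def allow_request(authenticated: bool, source_ip: str) -> bool:
    """Defense in depth: every control must pass; either one alone is not enough."""
    return authenticated and source_ip_allowed(source_ip)


print(allow_request(True, "10.20.5.1"))    # True: both controls pass
print(allow_request(True, "203.0.113.7"))  # False: blocked by the network check
print(allow_request(False, "10.20.5.1"))   # False: not authenticated
```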

5 Encryption

5.1 Data at Rest encryption

Encryption at rest is key to securing our data. Cloud Storage always encrypts our data on the server side before it is written to disk. There are three options for server-side encryption:

● Google-Managed Encryption Keys: Cloud Storage manages the encryption keys on behalf of the customer, with no further setup required. Google also handles key rotation, and this is the preferred option in most cases.

● Customer-Supplied Encryption Keys (CSEKs): This is where the customer creates and manages their own encryption keys.

● Customer-Managed Encryption Keys (CMEKs): This is where the customer generates and manages their encryption keys using GCP's Key Management Service (KMS).

In most cases, we recommend the default Google-managed encryption for all our buckets. BigQuery also encrypts data at rest by default, with keys managed by the service.

There is also the client-side encryption option where encryption occurs before data is sent to Cloud Storage and additional encryption takes place at the server side.
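With customer-supplied encryption keys, the key is sent with each request instead of being stored by Google, and losing the key means losing access to the data. The sketch below generates a random 256-bit AES key and builds the three request headers that Cloud Storage uses for CSEKs (x-goog-encryption-algorithm, x-goog-encryption-key, and x-goog-encryption-key-sha256). It only prepares the headers and does not send a request; in practice, the key would come from your own key store rather than being generated on the fly.

```python
import base64
import hashlib
import os


def new_csek() -> bytes:
    """Generate a random 256-bit AES key (in practice, keep it in your own key store)."""
    return os.urandom(32)


def csek_headers(key: bytes) -> dict:
    """Build Cloud Storage request headers for a customer-supplied encryption key."""
    if len(key) != 32:
        raise ValueError("a CSEK must be a 256-bit (32-byte) AES key")
    return {
        "x-goog-encryption-algorithm": "AES256",
        # The raw key bytes, base64-encoded.
        "x-goog-encryption-key": base64.b64encode(key).decode("ascii"),
        # SHA-256 hash of the raw key bytes, also base64-encoded.
        "x-goog-encryption-key-sha256": base64.b64encode(hashlib.sha256(key).digest()).decode("ascii"),
    }


key = new_csek()
headers = csek_headers(key)
print(sorted(headers))
```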

5.2 Data in Motion encryption

Because we are using Google-provided services, how data moves within Google's infrastructure is managed by Google, and we don't have control over it.

But we need to implement encryption in the following cases:

● Use TLS/SSL connections between front-end tools (such as Tableau) and BigQuery.

● Encrypt all files transferred between GCP and on-premises systems, for example by using SFTP instead of FTP.

5.3 Column level encryption

Certain columns that contain personally identifiable information (PII) may need to be encrypted within the BigQuery tables. This is in addition to data-at-rest encryption.

6 Data Level Security

As we are planning to use only Cloud Storage and BigQuery for our data lake needs, this section focuses mainly on them.

6.1 Process

Initially, when a data lake project is created in each environment, we will create all the required groups, add the required people to them, and assign roles to the groups. After that, any change, such as creating groups, adding people to groups, or granting permissions to groups, requires a ServiceNow (or similar tool) request with approval from the proper authority.

All the individuals get access through proper groups rather than direct access. The following process diagram explains the process that needs to be followed to get access to the data lake resources.
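The request-and-approval process can be modeled as a small state machine. In this minimal sketch, access is only ever granted by adding a user to a group, a request must be approved before it is fulfilled, and the approver must be someone other than the requester (the separation-of-duties practice). The AccessRequest class, group names, and people are hypothetical; a real implementation would live in ServiceNow or a similar tool.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    requester: str
    user: str       # person who needs access
    group: str      # access is always granted through a group, never directly
    status: str = "SUBMITTED"
    approver: str = ""

    def approve(self, approver: str) -> None:
        if self.status != "SUBMITTED":
            raise ValueError(f"cannot approve a request in state {self.status}")
        if approver == self.requester:
            # Separation of duties: nobody approves their own request.
            raise PermissionError("requester cannot approve their own request")
        self.approver = approver
        self.status = "APPROVED"

    def fulfill(self, groups: dict) -> None:
        if self.status != "APPROVED":
            raise ValueError("only approved requests can be fulfilled")
        groups.setdefault(self.group, set()).add(self.user)
        self.status = "FULFILLED"


groups = {"datalake-dev-analysts": set()}
request = AccessRequest(requester="alice", user="alice", group="datalake-dev-analysts")
request.approve("data-owner-bob")
request.fulfill(groups)
print(request.status, groups)
```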

6.2 Cloud Storage

Access to Google Cloud Storage is secured with IAM. The following are the predefined roles and their details:

● Storage Object Creator: Has rights to create objects but does not give permissions to view, delete, or overwrite objects

● Storage Object Viewer: Has rights to view objects and their metadata, but not the ACL, and has rights to list the objects in a bucket

● Storage Object Admin: Has full control over objects and can create, view, and delete objects.

● Storage Admin: Has full control over buckets and objects.
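Applying least privilege to these roles means choosing the smallest role that still covers what a user or job needs. The sketch below encodes the role descriptions above as simplified capability labels (the labels are illustrative assumptions; the real roles are defined by permission lists such as storage.objects.create) and picks the role with the fewest capabilities that covers the request.

```python
# Simplified capabilities of each predefined Cloud Storage role, taken from the
# descriptions above. These labels are illustrative, not real permission names.
STORAGE_ROLES = {
    "roles/storage.objectCreator": {"create"},
    "roles/storage.objectViewer": {"view", "list"},
    "roles/storage.objectAdmin": {"create", "view", "list", "delete", "overwrite"},
    "roles/storage.admin": {"create", "view", "list", "delete", "overwrite", "manage_buckets"},
}


def least_privileged_role(needed: set) -> str:
    """Pick the predefined role with the fewest capabilities that covers `needed`."""
    candidates = [
        (len(capabilities), role)
        for role, capabilities in STORAGE_ROLES.items()
        if needed <= capabilities
    ]
    if not candidates:
        raise ValueError(f"no predefined storage role covers {sorted(needed)}")
    return min(candidates)[1]


# An ingestion job that only writes new files:
print(least_privileged_role({"create"}))             # roles/storage.objectCreator
# An analyst who reads and lists files:
print(least_privileged_role({"view", "list"}))       # roles/storage.objectViewer
# A pipeline that rewrites files in place:
print(least_privileged_role({"view", "overwrite"}))  # roles/storage.objectAdmin
```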

Cloud Storage also offers security via ACLs. Below are the permissions available and their details:

● Reader: This can be applied to a bucket or an object. It has the right to list a bucket's contents and read bucket metadata. It also has the right to download an object's data.

● Writer: This can be applied to a bucket only and has the rights to list, create, overwrite, and delete objects in a bucket.

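● Owner: This can be applied to a bucket or an object. On a bucket, it grants Reader and Writer permissions; on an object, it grants Reader access. In both cases, it also grants the right to manage the bucket's or object's metadata, including its ACLs.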

6.3 Big Query

Access to BigQuery is secured through IAM. The following are the predefined roles:

● BigQuery User: This has rights to run jobs within the project. It can also create new datasets. Most individuals in an organization should be a user.

● BigQuery Job User: This has rights to run jobs within the project.

● BigQuery Read Session User: This has rights to create and read sessions within the project via the BigQuery Storage API.

● BigQuery Data Viewer: This has rights to read the dataset metadata and list tables in the dataset. It can also read data and metadata from the dataset tables.

● BigQuery Metadata Viewer: This has rights to list all datasets and read metadata for all datasets in the project. It can also list all tables and views and read metadata for all tables and views in the project.

● BigQuery Data Editor: This has rights to read the dataset metadata and to list tables in the dataset. It can create, update, get, and delete the dataset tables.

● BigQuery Data Owner: This has rights to read, update, and delete the dataset. It can also create, update, get and delete the dataset tables.

● BigQuery Admin: This has rights to manage all resources within the project.
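Querying a table takes two grants: permission to create a query job in the project (bigquery.jobs.create, included in BigQuery Job User and BigQuery User) and permission to read table data (bigquery.tables.getData, included in BigQuery Data Viewer and Data Editor). The sketch below encodes that rule using a partial, hand-copied subset of each role's permissions. It assumes the job runs in the same project that holds the dataset and is meant for reasoning about access, not as a replacement for the real role definitions.

```python
# Partial permission lists for a few predefined BigQuery roles; only the
# permissions relevant to querying are included.
ROLE_PERMISSIONS = {
    "roles/bigquery.user": {"bigquery.jobs.create", "bigquery.datasets.create"},
    "roles/bigquery.jobUser": {"bigquery.jobs.create"},
    "roles/bigquery.metadataViewer": {"bigquery.tables.get", "bigquery.tables.list"},
    "roles/bigquery.dataViewer": {"bigquery.tables.get", "bigquery.tables.list",
                                  "bigquery.tables.getData"},
    "roles/bigquery.dataEditor": {"bigquery.tables.get", "bigquery.tables.list",
                                  "bigquery.tables.getData", "bigquery.tables.create",
                                  "bigquery.tables.update", "bigquery.tables.delete"},
}


def permissions(roles) -> set:
    """Union of the (partial) permissions contained in the given roles."""
    granted = set()
    for role in roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    return granted


def can_query(project_roles, dataset_roles) -> bool:
    """A query needs a job in the project AND read access to the table data."""
    project_perms = permissions(project_roles)
    # Roles granted on the project are inherited by every dataset in it.
    dataset_perms = project_perms | permissions(dataset_roles)
    return ("bigquery.jobs.create" in project_perms
            and "bigquery.tables.getData" in dataset_perms)


print(can_query(["roles/bigquery.jobUser"], ["roles/bigquery.dataViewer"]))      # True
print(can_query(["roles/bigquery.jobUser"], ["roles/bigquery.metadataViewer"]))  # False
print(can_query([], ["roles/bigquery.dataViewer"]))                              # False
```

A typical grant for an analyst group is therefore BigQuery Job User on the project plus BigQuery Data Viewer on only the datasets they need.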

7 Data Security Implementation

The following flowchart explains how access requests can be fulfilled through an automated process.

The Security Command Center API reference is available here:

https://cloud.google.com/security-command-center/docs/reference/rest/

Programmatically setting up access:

https://cloud.google.com/security-command-center/docs/how-to-programmatic-access
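When access requests are fulfilled automatically, two jobs may try to update the same IAM policy at once. Cloud IAM guards against lost updates with the policy's etag: a client reads the policy, modifies it, and writes it back with the etag it read, and the write is rejected if the policy changed in the meantime. The sketch below reproduces that read-modify-write-retry loop against a hypothetical in-memory PolicyStore; it does not call the real API.

```python
import copy
import itertools


class ConcurrentModification(Exception):
    """Raised when the etag sent with a write no longer matches the stored policy."""


class PolicyStore:
    """Hypothetical in-memory stand-in for a resource's IAM policy endpoint."""

    def __init__(self):
        self._versions = itertools.count(1)
        self._policy = {"bindings": [], "etag": str(next(self._versions))}

    def get_policy(self) -> dict:
        return copy.deepcopy(self._policy)

    def set_policy(self, policy: dict) -> dict:
        if policy.get("etag") != self._policy["etag"]:
            raise ConcurrentModification("policy changed since it was read")
        self._policy = copy.deepcopy(policy)
        self._policy["etag"] = str(next(self._versions))  # every write gets a new etag
        return self.get_policy()


def grant(store: PolicyStore, role: str, member: str, max_attempts: int = 3) -> dict:
    """Read-modify-write with retries: re-read the policy if someone else wrote first."""
    for _ in range(max_attempts):
        policy = store.get_policy()
        for binding in policy["bindings"]:
            if binding["role"] == role:
                if member not in binding["members"]:
                    binding["members"].append(member)
                break
        else:  # no binding for this role yet
            policy["bindings"].append({"role": role, "members": [member]})
        try:
            return store.set_policy(policy)
        except ConcurrentModification:
            continue  # another writer won the race; retry on the fresh policy
    raise RuntimeError(f"gave up after {max_attempts} attempts")


store = PolicyStore()
stale = store.get_policy()  # read by a second, slower automation job
updated = grant(store, "roles/bigquery.dataViewer", "group:data-analysts@example.com")
print(updated["etag"], updated["bindings"])
try:
    store.set_policy(stale)  # the stale write is rejected instead of silently dropping the grant
except ConcurrentModification as err:
    print("rejected:", err)
```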

8 Logging, Monitoring and Auditing

It is very important to log all security-related events, such as who is accessing which resources and failed access attempts, into a central repository like Google Stackdriver, and to monitor it regularly.

This topic will be covered in more detail in the Cloud operations blog.
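As a starting point for monitoring failed attempts, the sketch below counts denied requests per caller in audit log entries that have been exported as JSON. It reads the protoPayload.authenticationInfo.principalEmail and protoPayload.status.code fields of Cloud Audit Logs entries and treats the google.rpc.Code values 7 (PERMISSION_DENIED) and 16 (UNAUTHENTICATED) as failures. The sample entries are hypothetical and trimmed to the fields the code reads. Keep in mind that Data Access audit logs are off by default for most services other than BigQuery.

```python
from collections import Counter

# google.rpc.Code values that indicate a failed access attempt.
PERMISSION_DENIED = 7
UNAUTHENTICATED = 16


def failed_attempts_by_principal(entries) -> Counter:
    """Count denied or unauthenticated requests per caller."""
    counts = Counter()
    for entry in entries:
        payload = entry.get("protoPayload", {})
        code = payload.get("status", {}).get("code", 0)  # a missing code (0) means OK
        if code in (PERMISSION_DENIED, UNAUTHENTICATED):
            principal = payload.get("authenticationInfo", {}).get("principalEmail", "<unknown>")
            counts[principal] += 1
    return counts


sample_entries = [  # hypothetical, trimmed audit log entries
    {"protoPayload": {"authenticationInfo": {"principalEmail": "mallory@example.com"},
                      "methodName": "storage.objects.get",
                      "status": {"code": 7, "message": "PERMISSION_DENIED"}}},
    {"protoPayload": {"authenticationInfo": {"principalEmail": "mallory@example.com"},
                      "methodName": "storage.objects.list",
                      "status": {"code": 7, "message": "PERMISSION_DENIED"}}},
    {"protoPayload": {"authenticationInfo": {"principalEmail": "alice@example.com"},
                      "methodName": "storage.objects.get",
                      "status": {}}},
]
print(failed_attempts_by_principal(sample_entries))
```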

9 Other Google Security services

9.1 Data Loss Prevention

The Data Loss Prevention (DLP) API provides a programmatic way to classify 90+ types of data. For example, if we have a spreadsheet with financial or personal information, we can use the DLP API to detect this sensitive information and mask it. With this API, we can easily classify and redact sensitive data.
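The DLP API does this with built-in infoType detectors and de-identification transformations. To illustrate the idea only, the sketch below swaps in two plain regular expressions named after DLP infoTypes (EMAIL_ADDRESS and US_SOCIAL_SECURITY_NUMBER) and replaces each match with a placeholder. This is not the DLP API and is far less accurate than its detectors; the patterns and placeholder format are illustrative assumptions.

```python
import re

# Illustrative stand-ins for two DLP infoType detectors (much cruder than the real ones).
PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SOCIAL_SECURITY_NUMBER": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str):
    """Replace each match with [INFO_TYPE] and report how many were found per type."""
    findings = {}
    for info_type, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{info_type}]", text)
        if count:
            findings[info_type] = count
    return text, findings


masked, findings = redact("Contact jane.doe@example.com, SSN 123-45-6789.")
print(masked)
print(findings)
```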

9.2 Cloud Security Scanner

Cloud Security Scanner is built to identify vulnerabilities in our Google App Engine applications. The scanner crawls our application and tries multiple user inputs in order to detect vulnerabilities. It can detect a range of vulnerabilities, such as XSS, Flash injection, mixed content, clear-text passwords, and the use of outdated or insecure JavaScript libraries.

Cloud Security Scanner can only be used with the Google App Engine standard environment and Compute Engine.

9.3 Key Management Service (KMS)

Cloud KMS is a hosted key management service that lets us manage encryption keys in the cloud. We can generate, rotate, use, and destroy AES-256 encryption keys just as we would in our on-premises environments. We can also use the Cloud KMS REST API to encrypt and decrypt data.
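Cloud KMS keys are addressed by a hierarchical resource name of the form projects/PROJECT_ID/locations/LOCATION/keyRings/KEY_RING/cryptoKeys/KEY_NAME, and this full name is what is supplied when a bucket or BigQuery dataset is configured with a customer-managed encryption key. The small helper below builds and parses such names; the project, location, key ring, and key names in the example are hypothetical.

```python
import re

CRYPTO_KEY_NAME = re.compile(
    r"^projects/(?P<project>[^/]+)/locations/(?P<location>[^/]+)"
    r"/keyRings/(?P<key_ring>[^/]+)/cryptoKeys/(?P<crypto_key>[^/]+)$"
)


def crypto_key_name(project: str, location: str, key_ring: str, crypto_key: str) -> str:
    """Build the full resource name of a Cloud KMS key."""
    name = (f"projects/{project}/locations/{location}"
            f"/keyRings/{key_ring}/cryptoKeys/{crypto_key}")
    if not CRYPTO_KEY_NAME.match(name):  # rejects empty parts or parts containing "/"
        raise ValueError(f"invalid key name component in {name!r}")
    return name


def parse_crypto_key_name(name: str) -> dict:
    """Split a Cloud KMS key resource name into its components."""
    match = CRYPTO_KEY_NAME.match(name)
    if match is None:
        raise ValueError(f"not a Cloud KMS key name: {name!r}")
    return match.groupdict()


name = crypto_key_name("datalake-prod", "us-central1", "datalake-keys", "raw-zone-key")
print(name)
print(parse_crypto_key_name(name)["key_ring"])  # datalake-keys
```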

The author has extensive experience in Big Data technologies and has worked in the IT industry for over 25 years in various capacities after completing his BS in computer science and MS in data science. He is a certified cloud architect and holds several certifications from Microsoft and Google. Please contact him at srao@unifieddatascience.com if you have any questions.
