Infrastructure and Platform Architecture – Modern data lakes and databases

- The platform should be portable to any cloud and to on-premises environments with minimal changes.

- Most of the technologies and processing will run on Kubernetes, so the platform can run on any Kubernetes cluster, on any cloud or on-premises.

- All technologies and processes use auto-scaling so that the platform allocates only the resources it needs at any given time, without compromising the end results.

- It will take advantage of spot instances and other cost-effective features and technologies wherever possible to minimize cost.

- It will use open-source technologies to save licensing costs.

- It will auto-provision most of the technologies, such as Argo Workflows, Spark and JupyterHub (the development environment for ML), which minimizes the use of provider-specific managed services. This not only saves money but also keeps the platform portable to any cloud, multi-cloud or on-premises environment.

- Code Repository

- Compute

- Object store

The object store is the critical part of the design. It holds all persistent data, including the entire data lake and the deep storage for databases (metadata, catalogs and online data), and it stores logs and any other information that needs to be persisted. Policies will be created to archive and purge data according to retention policies and compliance requirements.
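The archive-and-purge policies described above can be sketched as a small age-based classifier. This is only an illustration: the thresholds (90 days to archive, 365 days to purge) and the name `retention_action` are hypothetical, and in practice the object store's own lifecycle rules would likely enforce whatever values the retention and compliance requirements dictate.

```python
from datetime import date

# Hypothetical thresholds; real values depend on retention and compliance rules.
ARCHIVE_AFTER_DAYS = 90
PURGE_AFTER_DAYS = 365


def retention_action(last_modified: date, today: date) -> str:
    """Return 'keep', 'archive', or 'purge' based on the object's age in days."""
    age_days = (today - last_modified).days
    if age_days >= PURGE_AFTER_DAYS:
        return "purge"
    if age_days >= ARCHIVE_AFTER_DAYS:
        return "archive"
    return "keep"


if __name__ == "__main__":
    today = date(2024, 6, 30)
    for modified in (date(2024, 6, 1), date(2024, 1, 15), date(2023, 1, 1)):
        print(modified, retention_action(modified, today))
```

Keeping the decision in one pure function makes the policy easy to review and test before it is translated into provider-specific lifecycle configuration.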

Code Repository

The entire infrastructure, software and data pipelines will be created from a code repository, which plays a key role in this project. The entire “compute” layer can be created from the code repository in conjunction with a DevOps toolset.

Compute

All compute will be created on Kubernetes using the code repository and CI/CD pipelines. It can work on any cloud or on-premises, provided a Kubernetes environment is available.

If we implement this on a cloud, we plan to run as much of the processing as possible on spot instances, which will significantly reduce the cost. The target for the entire compute layer is 30% (or less) on regular instances and 70% (or more) on spot instances.
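To make the effect of the 30/70 target concrete, the blended cost can be estimated with simple arithmetic. The sketch below assumes a single on-demand price and a flat spot discount; both inputs, and the function name `blended_hourly_cost`, are illustrative assumptions, since actual spot pricing varies by provider, instance type and time.

```python
def blended_hourly_cost(on_demand_price: float, spot_discount: float,
                        spot_share: float = 0.70) -> float:
    """Average hourly cost per instance for a mixed on-demand/spot fleet.

    on_demand_price: hourly price of a regular instance.
    spot_discount:   fraction saved on spot versus on-demand (0.0 to 1.0).
    spot_share:      fraction of compute running on spot (0.70 per the target).
    """
    if not 0.0 <= spot_discount <= 1.0 or not 0.0 <= spot_share <= 1.0:
        raise ValueError("spot_discount and spot_share must be between 0 and 1")
    spot_price = on_demand_price * (1.0 - spot_discount)
    return (1.0 - spot_share) * on_demand_price + spot_share * spot_price


if __name__ == "__main__":
    # Assumed inputs: 1.00 per hour on-demand, spot 60% cheaper.
    cost = blended_hourly_cost(1.00, 0.60)
    print(f"blended cost per instance-hour: {cost:.2f}")
```

With an assumed 60% spot discount, the 30/70 split gives a blended cost of roughly 0.58 per instance-hour for every 1.00 of on-demand cost, a reduction of about 42%.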

Technical Architecture

The following is the proposed infrastructure and platform technical architecture, which should satisfy all the Data Lake project technical needs. Most of the technologies will be built as self-serve capabilities using automation tools so that the developers, Data Scientists and ML Engineers can use them as needed.

Data Processing and ML Training

Most of the work is done during this stage of the project, and most of the project's cost is incurred here.

Argo Workflows and Argo Events

Argo Workflows is an open-source workflow engine for orchestrating tasks on Kubernetes. It allows us to create and run advanced workflows entirely on our Kubernetes cluster. Argo Workflows is built on top of Kubernetes, and each task runs as a separate Kubernetes pod. Many organizations in the industry use Argo Workflows for machine learning (ML), ETL (extract, transform, load), data processing and CI/CD pipelines.

Argo is essentially a Kubernetes extension and is therefore installed into a Kubernetes cluster. Argo Workflows allows organizations to define their tasks as DAGs (directed acyclic graphs) using YAML. Argo comes with a native Workflow Archive for auditing, Cron Workflows for scheduled workflows, and a fully featured REST API. It also has several features that set it apart from similar products.

Note: the above diagram is courtesy of Argo documentation.
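As a rough illustration of how tasks are defined as a DAG in Argo Workflows, the sketch below builds a three-step Workflow manifest in Python and prints it as JSON. The step names, the image `example.com/etl:latest` and the script names are hypothetical placeholders; the manifest fields (`entrypoint`, `templates`, `dag.tasks`, `dependencies`) follow the Argo Workflows `Workflow` resource.

```python
import json


def container_template(name: str, image: str, command: list) -> dict:
    """A template that runs one container; Argo runs each task as its own pod."""
    return {"name": name, "container": {"image": image, "command": command}}


def build_workflow() -> dict:
    """Build an ingest -> transform -> publish DAG as a Workflow manifest."""
    steps = ["ingest", "transform", "publish"]  # hypothetical step names
    tasks = []
    for i, step in enumerate(steps):
        task = {"name": step, "template": step}
        if i > 0:
            task["dependencies"] = [steps[i - 1]]  # run after the previous step
        tasks.append(task)
    templates = [{"name": "pipeline", "dag": {"tasks": tasks}}]
    templates += [
        # Hypothetical image and scripts, used only for illustration.
        container_template(s, "example.com/etl:latest", ["python", f"{s}.py"])
        for s in steps
    ]
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "etl-pipeline-"},
        "spec": {"entrypoint": "pipeline", "templates": templates},
    }


if __name__ == "__main__":
    print(json.dumps(build_workflow(), indent=2))
```

Because JSON is also valid YAML, the printed manifest can be saved to a file and submitted like any other Workflow manifest. Generating manifests from code is one option; hand-written YAML kept in the code repository works equally well.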

Argo Events is an event-driven workflow automation framework for Kubernetes. It helps us trigger Kubernetes objects, Argo Workflows, serverless workloads and so on in response to events from a variety of sources such as webhooks, S3, schedules, messaging queues, GCP Pub/Sub, SNS and SQS.

Note: the above diagram is courtesy of Argo documentation.

Key Features and advantages of Argo

Open-Source: Argo is a fully open-source project at the Cloud Native Computing Foundation (CNCF). It is available free of cost for everyone to use.

Native Integrations: Argo comes with native artifact support to download, transport, and upload files during runtime. It supports S3-compatible artifact repositories such as AWS S3 and MinIO, as well as GCS, HTTP, Git and raw artifacts. It is also easy to integrate with Spark using SPOK (Spark on Kubernetes), and it can be used to run Kubeflow ML pipelines.

Scalability: Argo Workflows has robust retry mechanisms for high reliability and is highly scalable. It can manage thousands of pods and workflows in parallel.

Customizability: Argo is highly customizable, and it supports templating and composability to create and reuse workflows.

Powerful User Interface: Argo comes with a fully featured User Interface (UI) that is easy to use. Argo Workflows v3.0 UI also supports Argo Events and is more robust and reliable. It has embeddable widgets and a new workflow log viewer.

Cost savings: We can run much of the processing on spot instances where permitted, which significantly reduces cost.
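The retry mechanisms noted under Scalability are what make spot instances practical: a task whose pod is reclaimed can simply be retried. A minimal sketch of attaching a `retryStrategy` to an Argo template is shown below. The helper name `with_retries` and the specific limit and backoff values are assumptions chosen for illustration, not tuned recommendations.

```python
import copy


def with_retries(template: dict, limit: int = 3) -> dict:
    """Return a copy of an Argo template with a retryStrategy attached."""
    patched = copy.deepcopy(template)  # leave the caller's template untouched
    patched["retryStrategy"] = {
        "limit": limit,           # maximum number of retries
        "retryPolicy": "Always",  # retry on failures and on errors such as pod eviction
        # Illustrative backoff: 30s, then doubling, capped at 10 minutes overall.
        "backoff": {"duration": "30s", "factor": 2, "maxDuration": "10m"},
    }
    return patched


if __name__ == "__main__":
    # Hypothetical container template used only for illustration.
    base = {"name": "transform",
            "container": {"image": "example.com/etl:latest",
                          "command": ["python", "transform.py"]}}
    print(with_retries(base))
```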

Spark (SPOK)

It is recommended to use Spark for most of the processing wherever possible, including data ingestion, transformation and ML training. Spark is a reliable and proven distributed processing engine that can run ETL pipelines very quickly. It is heavily used in the industry and is a standard choice for big data and data lake projects. We can also leverage spot instances for Spark processing, which reduces the overall cost of the project.

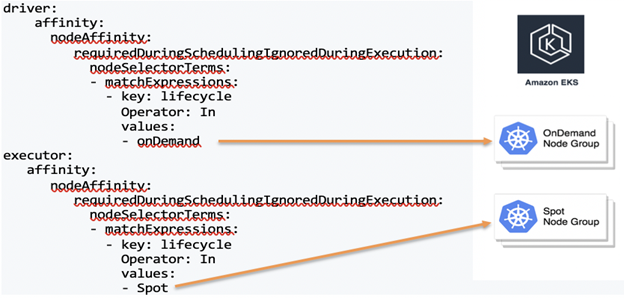

The following is an example of how we can run Spark effectively on spot node pools. Because all the processing happens on executor nodes, running executors on spot node pools saves a great deal of cost. Spark can also reprocess failed tasks automatically, which makes it a reliable candidate for running workloads on spot instances.
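One way such a placement might be configured is through Spark's Kubernetes node selector settings, which are available in Spark 3.3 and later. The sketch below assembles a `spark-submit` command that keeps the driver on regular nodes and sends executors to spot nodes. The node label `lifecycle` with values `on-demand` and `spot`, the image name, the application path and the `spark.task.maxFailures` value are all hypothetical and would need to match the actual cluster.

```python
def spark_submit_args(app: str, image: str, executors: int) -> list:
    """Assemble spark-submit arguments for a driver on regular nodes and
    executors on spot nodes (node label key and values are hypothetical)."""
    conf = {
        "spark.kubernetes.container.image": image,
        "spark.executor.instances": str(executors),
        # Driver stays on regular nodes so losing a spot node does not kill the job.
        "spark.kubernetes.driver.node.selector.lifecycle": "on-demand",
        # Executors do the processing, so they go to the cheaper spot pool.
        "spark.kubernetes.executor.node.selector.lifecycle": "spot",
        # Allow extra task attempts when executors are lost (illustrative value).
        "spark.task.maxFailures": "8",
    }
    args = ["spark-submit",
            "--master", "k8s://https://kubernetes.default.svc",
            "--deploy-mode", "cluster"]
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    args.append(app)
    return args


if __name__ == "__main__":
    print(" ".join(spark_submit_args(
        "local:///opt/spark/app/etl.py", "example.com/spark:latest", 4)))
```

If the spot node pool is tainted, executors would additionally need matching tolerations, which Spark supports through pod template files rather than plain configuration keys.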

ArgoCD

ArgoCD allows us to deploy custom or vendor-provided images that process the incoming data and run any other workloads. These workloads can also run on a spot instance node pool with retries, although some enhancements may be required to run them there.

Hive

Hive can be used to access data stored on any file system using SQL. It can be used for metadata cataloging as well. Hive also comes with ACID transactional features, which can be used for delta lake use cases, if any arise.

Delta lake or Hive Transactional

Delta lake allows row-level manipulation of data and supports time travel on data. Hive transactional tables, Databricks and Iceberg are some of the technologies that facilitate delta lake capabilities on big data.

Streaming (Kafka)

Kafka or similar technologies can be used where we need to process non-file-based incoming data in real time.

JupyterHub

JupyterHub brings the power of notebooks to groups of users. It gives users access to computational environments and resources without burdening the users with installation and maintenance tasks. Users - including engineers and data scientists - can get their work done in their own workspaces on shared resources which can be managed efficiently by system administrators.

Running JupyterHub on Kubernetes makes it possible to serve a pre-configured data science environment to any user.

Persistent data storage

The object store (S3, Azure Blob, GCS) plays a critical role in storing all the data, which is a key part of this project. The following are some of the ways to store and process data from the object store cost-effectively:

❖ Compress data effectively. This reduces storage costs, IO costs and processing times.

❖ Retain only required data. Have an archival process in place.

❖ Partition data effectively. This will reduce the IO costs when processing the data.

❖ Minimize access to raw data by adding presentation layers that hold only the data consumers need.

❖ Utilize cold storage wherever possible.
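The partitioning point above can be illustrated with Hive-style partitioned object keys, where the path itself encodes the partition values. In the sketch below, a reader that only needs one month can select objects by key prefix and never touch the rest, which is where the IO saving comes from. The dataset name, file names and helper names (`partition_key`, `prune`) are hypothetical.

```python
from datetime import date


def partition_key(dataset: str, day: date, filename: str) -> str:
    """Build a Hive-style partitioned object key (key=value path segments)."""
    return (f"{dataset}/year={day.year:04d}/month={day.month:02d}/"
            f"day={day.day:02d}/{filename}")


def prune(keys: list, dataset: str, year: int, month: int) -> list:
    """Keep only the keys for the requested month, using the key prefix alone."""
    prefix = f"{dataset}/year={year:04d}/month={month:02d}/"
    return [k for k in keys if k.startswith(prefix)]


if __name__ == "__main__":
    days = [date(2024, 1, 15), date(2024, 1, 16), date(2024, 2, 1)]
    keys = [partition_key("events", d, "part-0000.snappy.parquet") for d in days]
    for key in prune(keys, "events", 2024, 1):
        print(key)
```

Query engines that understand this layout apply the same idea automatically when a query filters on the partition columns.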

Virtualization layer

As accessing data directly from the object store is cumbersome, it is a good idea to build a virtualization layer on top of it. This brings the following advantages over working with raw data:

❖ Data can be accessed using SQL statements.

❖ The layer serves as a metadata catalog on top of the object store.

❖ Partitioning and other optimization techniques can be used to reduce IO when consuming data from the object store.

Hive, Athena, Presto and Redshift Spectrum are some of the technologies that can be used for this purpose.

Database (Speed/Consumption data layer)

An OLAP and/or NoSQL database may be required to satisfy end-user data consumption needs. Databases such as StarRocks, Druid or Cassandra may be used for this purpose. The selection of the database is based on the following requirements:

❖ The volume of the data stored in the database.

❖ The amount of data ingested per time interval.

❖ How the data is ingested: real-time streaming, batch processing, or both.

❖ Response times needed by end users when accessing data.

❖ Required throughput in queries or transactions per second (QPS/TPS).

❖ Data access patterns.

Data facilitation and secure data access

This stage is lightweight and facilitates access to the data, which is stored in a persistent data store such as a database. It also provides authentication and authorization mechanisms for accessing the data.

Serverless Cloud functions and/or microservice architecture may be used to facilitate REST APIs.

Orchestration and DevOps

Kubernetes, Docker, and Container services

Kubernetes, Docker, and container services play key roles in this project. These technologies facilitate most of the components mentioned above. Technologies such as Spark will be built as containers and made readily available so that developers can use them to build workflows, pipelines and so on.

The Kubernetes cluster will have different node pools (or groups) to run different workloads. Most of the processing pipelines and model training can run on node pools (groups) with spot instances.
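Steering a workload onto a particular node pool is typically done with a node selector and, where the pool is tainted, a matching toleration. The sketch below patches a pod spec accordingly. The label and taint key `lifecycle` with value `spot` is a hypothetical convention; each cloud provider and cluster setup uses its own labels, so the real keys would need to be checked against the cluster.

```python
import copy


def schedule_on_spot(pod_spec: dict) -> dict:
    """Return a copy of a pod spec that targets a spot node pool.

    Assumes spot nodes carry the label lifecycle=spot and the taint
    lifecycle=spot:NoSchedule (a hypothetical naming convention).
    """
    patched = copy.deepcopy(pod_spec)  # do not mutate the caller's spec
    patched.setdefault("nodeSelector", {})["lifecycle"] = "spot"
    patched.setdefault("tolerations", []).append({
        "key": "lifecycle",
        "operator": "Equal",
        "value": "spot",
        "effect": "NoSchedule",
    })
    return patched


if __name__ == "__main__":
    # Hypothetical training container used only for illustration.
    spec = {"containers": [{"name": "train", "image": "example.com/train:latest"}]}
    print(schedule_on_spot(spec))
```

Workloads that must not be interrupted would simply skip this patch and land on the regular node pools.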

CI/CD

Jenkins (CI) and Argo CD (CD) will be used for continuous integration and continuous deployment into Kubernetes. Terraform may be used for non-Kubernetes related cloud deployments. Some additional tools like Chef or Ansible may be leveraged when the need arises for those technologies.

Git is used as a code repository, which will be integrated with CI/CD pipelines.